Embarrassingly parallel Workloads


This notebook shows how to use Dask to parallelize embarrassingly parallel workloads, where you want to apply one function to many pieces of data independently. It shows three ways of doing this with Dask: dask.delayed, the Futures API, and dask.bag.

This example focuses on using Dask to build large embarrassingly parallel computations, as often seen in scientific communities and at High Performance Computing facilities, for example with Monte Carlo methods. This kind of simulation assumes the following:

We have a function that runs a heavy computation given some parameters.

We need to compute this function on many different input parameters, each function call being independent.

We want to gather all the results in one place for further analysis.

Start Dask Client for Dashboard

Starting the Dask Client provides a dashboard, which is useful for gaining insight into the computation. We will also need the client for the Futures API part of this example. Moreover, as this kind of computation is often launched on a supercomputer or in the cloud, you will probably end up having to start a cluster and connect a client to it in order to scale. See dask-jobqueue, dask-kubernetes, or dask-yarn for easy ways to achieve this on HPC, cloud, or big data infrastructure, respectively.

The link to the dashboard will become visible when you create the client below. We recommend having it open on one side of your screen while using your notebook on the other side. Arranging your windows can take some effort, but seeing both at the same time is very useful when learning.

[1]:

from dask.distributed import Client, progress

client = Client(threads_per_worker=4, n_workers=1)

client

[1]:

Client

Client-6c01af35-0de0-11ed-9e28-000d3a8f7959

| Connection method: Cluster object | Cluster type: distributed.LocalCluster |

| Dashboard: http://127.0.0.1:8787/status |

Cluster Info

LocalCluster

6c9279a2

| Dashboard: http://127.0.0.1:8787/status | Workers: 1 |

| Total threads: 4 | Total memory: 6.78 GiB |

| Status: running | Using processes: True |

Scheduler Info

Scheduler

Scheduler-859f8895-4dc5-45e4-945c-593b2390b28a

| Comm: tcp://127.0.0.1:46295 | Workers: 1 |

| Dashboard: http://127.0.0.1:8787/status | Total threads: 4 |

| Started: Just now | Total memory: 6.78 GiB |

Workers

Worker: 0

| Comm: tcp://127.0.0.1:33017 | Total threads: 4 |

| Dashboard: http://127.0.0.1:45931/status | Memory: 6.78 GiB |

| Nanny: tcp://127.0.0.1:46881 | |

| Local directory: /home/runner/work/dask-examples/dask-examples/applications/dask-worker-space/worker-ii4pk6hj | |

Define your computation calling function

This function does a simple operation: it adds all the numbers of a list or array together, but it also sleeps for a random amount of time to simulate real work. In real use cases, this could call another Python module, or even run an executable using the subprocess module.

[2]:

import time

import random

def costly_simulation(list_param):
    time.sleep(random.random())
    return sum(list_param)

We try it locally below:

[3]:

%time costly_simulation([1, 2, 3, 4])

CPU times: user 2.24 ms, sys: 1.62 ms, total: 3.85 ms

Wall time: 146 ms

[3]:

10
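The remark above about running an executable through the subprocess module can be sketched as follows. This is a minimal illustration and not part of the original notebook: the name run_external_simulation is hypothetical, and the Python interpreter itself (sys.executable) stands in for a real simulation binary, whose command-line interface would differ.

```python
import subprocess
import sys


def run_external_simulation(list_param):
    """Sum the parameters in a child process and parse its printed result."""
    # Stand-in for a real simulation binary: a one-line Python program that
    # prints the sum of its command-line arguments (an assumption made for
    # illustration; a real executable defines its own interface).
    program = "import sys; print(sum(float(x) for x in sys.argv[1:]))"
    completed = subprocess.run(
        [sys.executable, "-c", program, *map(str, list_param)],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if the child exits with an error
    )
    return float(completed.stdout.strip())


external_result = run_external_simulation([1, 2, 3, 4])
```

A wrapper like this has the same shape as costly_simulation (parameters in, one value out), so it could be passed to the Dask APIs shown later in the same way.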

Define the set of input parameters to call the function

We will generate a set of inputs on which we want to run our simulation function. Here we use a pandas DataFrame, but we could also use a simple list. Let's say that our simulation is run with four parameters called param_[a-d].

[4]:

import pandas as pd

import numpy as np

input_params = pd.DataFrame(np.random.random(size=(500, 4)),
                            columns=['param_a', 'param_b', 'param_c', 'param_d'])

input_params.head()

[4]:

| | param_a | param_b | param_c | param_d |
|---|---|---|---|---|
| 0 | 0.312124 | 0.071036 | 0.799919 | 0.766664 |
| 1 | 0.956577 | 0.471111 | 0.554442 | 0.263298 |
| 2 | 0.583202 | 0.789435 | 0.135610 | 0.622723 |
| 3 | 0.990032 | 0.253090 | 0.225035 | 0.190979 |
| 4 | 0.130992 | 0.303275 | 0.514555 | 0.420719 |

Without Dask, we could call our simulation on all of these parameters using a normal Python for loop.

Let’s only do this on a sample of our parameters, as it would take quite long otherwise.

[5]:

results = []

[6]:

%%time

for parameters in input_params.values[:10]:
    result = costly_simulation(parameters)
    results.append(result)

CPU times: user 123 ms, sys: 15.1 ms, total: 138 ms

Wall time: 4.25 s

[7]:

results

[7]:

[1.9497434903818136,

2.245428056748925,

2.1309710532899184,

1.65913554017504,

1.3695413820396394,

1.5063310042417761,

1.2444777543824386,

1.531794781158632,

1.3147033688719252,

2.925532773375028]

Running these calls one after another is wasteful: each call is independent of the others, so the loop is easy to parallelize.

There are many ways to parallelize this function in Python with libraries like multiprocessing, concurrent.futures, joblib or others. These are good first steps. Dask is a good second step, especially when you want to scale across many machines.
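As a point of comparison, here is a minimal sketch of the same pattern with concurrent.futures from the standard library, one of the "first step" options listed above. The inputs are made up, the sleep is shortened so the sketch runs quickly, and the thread count of 4 is an arbitrary choice.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


def costly_simulation(list_param):
    # Same shape as the notebook's function, with a much shorter sleep.
    time.sleep(random.random() / 100)
    return sum(list_param)


# Hypothetical inputs: ten parameter sets of four values each.
parameter_sets = [[i, i + 1, i + 2, i + 3] for i in range(10)]

# Threads are enough here because the simulated work is a sleep, which
# releases the GIL; CPU-bound pure-Python work would call for processes.
with ThreadPoolExecutor(max_workers=4) as executor:
    # executor.map preserves input order in its results.
    pool_results = list(executor.map(costly_simulation, parameter_sets))
```

This stays on a single machine, which is the limitation that motivates moving to Dask when the workload outgrows one node.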

Use Dask Delayed to make our function lazy

We can call dask.delayed on our function to make it lazy. Rather than computing its result immediately, it records what we want to compute as a task in a graph that we’ll run later on parallel hardware. Using dask.delayed is a relatively straightforward way to parallelize an existing code base, even if the computation isn’t embarrassingly parallel like this one.

Calling these lazy functions is now almost free. In the cell below we only construct a simple graph.

[8]:

import dask

lazy_results = []

[9]:

%%time

for parameters in input_params.values[:10]:
    lazy_result = dask.delayed(costly_simulation)(parameters)
    lazy_results.append(lazy_result)

CPU times: user 1.43 ms, sys: 0 ns, total: 1.43 ms

Wall time: 1.08 ms

[10]:

lazy_results[0]

[10]:

Delayed('costly_simulation-ae2bb390-5058-4db9-a033-6498fb822270')

Run in parallel

The lazy_results list contains information about ten calls to costly_simulation that have not yet been run. Call dask.compute (or .compute() on a single lazy result) when you want the results as normal Python objects.

If you started Client() above then you may want to watch the status page during computation.

[11]:

%time dask.compute(*lazy_results)

CPU times: user 261 ms, sys: 43.6 ms, total: 304 ms

Wall time: 1.44 s

[11]:

(1.9497434903818136,

2.245428056748925,

2.1309710532899184,

1.65913554017504,

1.3695413820396394,

1.5063310042417761,

1.2444777543824386,

1.531794781158632,

1.3147033688719252,

2.925532773375028)

Notice that this was faster than running these same computations sequentially with a for loop (1.44 s of wall time here versus 4.25 s above).

We can now run this on all of our input parameters:

[12]:

import dask

lazy_results = []

for parameters in input_params.values:
    lazy_result = dask.delayed(costly_simulation)(parameters)
    lazy_results.append(lazy_result)

futures = dask.persist(*lazy_results) # trigger computation in the background

To make this go faster, we can add additional workers (although we are still only working on our local machine; this is more practical when using an actual cluster).

[13]:

client.cluster.scale(10) # ask for ten 4-thread workers

By looking at the Dask dashboard we can see that Dask spreads this work around our cluster, managing load balancing, dependencies, etc.

Then get the result:

[14]:

results = dask.compute(*futures)

results[:5]

[14]:

(1.9497434903818136,

2.245428056748925,

2.1309710532899184,

1.65913554017504,

1.3695413820396394)
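dask.delayed is not limited to independent calls. As noted earlier, it also handles computations where one step depends on others. The following is a minimal sketch under assumed names: simulate and summarize are hypothetical functions, and the threaded scheduler is chosen only so that the example runs without a cluster.

```python
import dask


@dask.delayed
def simulate(list_param):
    # Independent step: one task per parameter set.
    return sum(list_param)


@dask.delayed
def summarize(values):
    # Dependent step: runs only after every simulate task has finished.
    return max(values) - min(values)


parameter_sets = [[1, 2, 3, 4], [5, 6, 7, 8], [0, 0, 0, 1]]
partial_results = [simulate(p) for p in parameter_sets]
spread = summarize(partial_results)  # still lazy: nothing has run yet

# The threaded scheduler is used here so the sketch runs without a cluster.
spread_value = spread.compute(scheduler="threads")
```

The three simulate tasks can run in parallel, while summarize waits for all of them; Dask works out that ordering from the graph.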

Using the Futures API

The same example can be implemented using Dask’s Futures API by using the client object itself. For our use case of applying a function across many inputs both Dask delayed and Dask Futures are equally useful. The Futures API is a little bit different because it starts work immediately rather than being completely lazy.

For example, notice that work starts immediately in the cell below as we submit work to the cluster:

[15]:

futures = []

for parameters in input_params.values:
    future = client.submit(costly_simulation, parameters)
    futures.append(future)

We can explicitly wait until this work is done and gather the results to our local process by calling client.gather:

[16]:

results = client.gather(futures)

results[:5]

[16]:

[1.9497434903818136,

2.245428056748925,

2.1309710532899184,

1.65913554017504,

1.3695413820396394]

The code above can be written in fewer lines with client.map(), which calls a given function on each item of a list of parameters.

As with delayed, we can start the computation without waiting for the results by not calling client.gather() right away.

Note that the Dask cluster has already run costly_simulation on these input parameters through the Futures API above. Because Dask treats the function as pure by default and derives each task's key from the function and its arguments, the call to client.map() below does not trigger any new computation; it simply retrieves the already computed results.

[17]:

futures = client.map(costly_simulation, input_params.values)

Then just get the results later:

[18]:

results = client.gather(futures)

len(results)

[18]:

500

[19]:

print(results[0])

1.9497434903818136

We encourage you to keep the dashboard’s status page open to follow ongoing computations.
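The reuse of already computed results mentioned above can be observed through task keys. The sketch below assumes a small in-process cluster created only for the demonstration; demo_client and add_all are hypothetical names, and the dashboard is disabled to keep the example light.

```python
from dask.distributed import Client


def add_all(values):
    return sum(values)


# A small in-process cluster, used only so that the sketch is self-contained.
demo_client = Client(processes=False, n_workers=1, threads_per_worker=2,
                     dashboard_address=None)

first = demo_client.submit(add_all, [1, 2, 3])
second = demo_client.submit(add_all, [1, 2, 3])  # same function, same input
impure = demo_client.submit(add_all, [1, 2, 3], pure=False)  # opt out of reuse

same_key = first.key == second.key      # both futures point at one task
distinct_key = impure.key != first.key  # pure=False gets a fresh, unique key
values = demo_client.gather([first, second, impure])

demo_client.close()
```

For a function like costly_simulation that sleeps for a random time, or any function with side effects, pure=False is the option to reach for when every call really must run.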

Doing some analysis on the results

One benefit of Dask here, besides API simplicity, is that you can gather the results of all your simulations in one call. There is no need to implement a complex mechanism or to write individual results to a shared file system or object store.

Just get your results and do some computation.

Here, we will just get the results and expand our initial dataframe to have a nice view of parameters vs. results for our computation.

[20]:

output = input_params.copy()

output['result'] = pd.Series(results, index=output.index)

output.sample(5)

[20]:

| | param_a | param_b | param_c | param_d | result |
|---|---|---|---|---|---|
| 94 | 0.398214 | 0.472349 | 0.366929 | 0.822720 | 2.060212 |
| 114 | 0.808715 | 0.863210 | 0.818088 | 0.396112 | 2.886125 |
| 342 | 0.072536 | 0.814248 | 0.309066 | 0.631732 | 1.827582 |
| 427 | 0.822234 | 0.947608 | 0.423060 | 0.200821 | 2.393723 |
| 322 | 0.358192 | 0.228315 | 0.864903 | 0.195684 | 1.647095 |

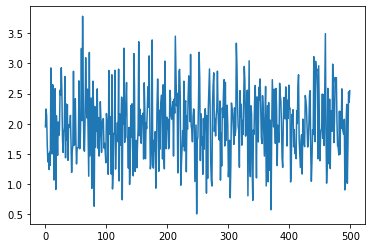

Then we can make some statistical plots or save the results locally using the pandas interface:

[21]:

%matplotlib inline

output['result'].plot()

[21]:

<AxesSubplot:>

[22]:

output['result'].mean()

[22]:

1.9844499213466507

[23]:

filtered_output = output[output['result'] > 2]

print(len(filtered_output))

filtered_output.to_csv('/tmp/simulation_result.csv')

244

Handling very large simulation with Bags

The methods above work well for up to about 100,000 input parameters. Above that, the Dask scheduler has trouble handling the number of tasks it must schedule to workers. The solution to this problem is to bundle many parameters into a single task. You could do this by writing a new function that operates on a batch of parameters and using the delayed or futures APIs on that function. You could also use the Dask Bag API. This is described in more detail in the documentation about avoiding too many tasks.
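The first option, a function that operates on a batch of parameters, could look roughly like the sketch below. The helper names simulate_batch and chunked, the batch size of 100, and the input values are all assumptions made for illustration; the sleep is left out so the example runs quickly.

```python
import dask


def costly_simulation(list_param):
    # Stand-in for the notebook's function, without the sleep.
    return sum(list_param)


def simulate_batch(batch):
    # One task evaluates a whole batch, so the scheduler tracks far fewer tasks.
    return [costly_simulation(p) for p in batch]


def chunked(items, size):
    # Yield consecutive slices of at most `size` items.
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Hypothetical inputs: 1,000 parameter sets grouped into batches of 100.
parameter_sets = [[i, i + 1] for i in range(1000)]
batch_tasks = [dask.delayed(simulate_batch)(batch)
               for batch in chunked(parameter_sets, 100)]

batch_outputs = dask.compute(*batch_tasks, scheduler="threads")
# Flatten the per-batch lists back into one result per parameter set.
batched_results = [r for batch in batch_outputs for r in batch]
```

Here 1,000 calls become 10 tasks. The batch size is a tuning choice: larger batches mean less scheduler overhead but coarser load balancing.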

Dask Bags hold onto large sequences in a few partitions. We can convert our input_params sequence into a dask.bag collection, asking for fewer partitions than there are items (at most 100,000, which is already huge), and apply our function to every item of the bag.

[24]:

import dask.bag as db

b = db.from_sequence(list(input_params.values), npartitions=100)

b = b.map(costly_simulation)

[25]:

%time results_bag = b.compute()

CPU times: user 926 ms, sys: 121 ms, total: 1.05 s

Wall time: 7.79 s

Looking at the dashboard here, you should see only 100 tasks to run instead of 500, each taking about 5x longer on average, because each one actually calls our function 5 times.
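The figure of 5 calls per task follows from 500 items spread over 100 partitions. A quick way to check this is to count the items in each partition, as in this sketch with placeholder integers instead of the real parameters (the threaded scheduler is an assumption so that it runs without a cluster):

```python
import dask.bag as db

# 500 placeholder items split into 100 partitions, as in the cell above.
bag = db.from_sequence(range(500), npartitions=100)

# Count the items held by each partition; one task runs per partition.
partition_sizes = bag.map_partitions(lambda part: [len(list(part))]).compute(
    scheduler="threads"
)
```

Every entry of partition_sizes is the number of function calls that a single task would perform after b.map(costly_simulation).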

[26]:

np.allclose(results, results_bag)  # element-wise comparison of the two result sets

[26]:

True
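When checking that two runs agree, it is worth keeping in mind that np.all only tests whether all values are truthy, so comparing np.all of one sequence with np.all of another says nothing about whether the values match. An element-wise comparison such as np.allclose does. A small sketch with made-up values:

```python
import numpy as np

first = [1.0, 2.0, 3.0]
second = [4.0, 5.0, 6.0]  # clearly different values

# np.all reduces each sequence to one boolean ("are all values truthy?"),
# so this compares True with True and says nothing about equality.
truthiness_check = bool(np.all(first) == np.all(second))

# np.allclose compares the sequences element by element within a tolerance.
elementwise_check = bool(np.allclose(first, second))
same_values_check = bool(np.allclose(first, list(first)))
```

With these inputs the truthiness comparison passes even though the values differ, while the element-wise check tells the two cases apart.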